How AWS Lambda Runs Your Code

A breakdown of the surprisingly simple Lambda Runtime API

For the past few weeks, I've been working on an update to Serverless Stack (SST) that contains some major changes to how your Lambda functions are executed locally.

While I generally encourage using real AWS services as much as possible during development, SST enables local execution of functions so that code changes are reflected instantly without waiting for a full upload to AWS.

To keep local behavior in line with production, we closely mirror how AWS invokes your code. That might sound like a lot of work, but the underlying protocol is fairly simple, and it's what we'll look at in this post.

The Lambda Runtime API

When your code runs in AWS, it has access to the Lambda Runtime API, a fairly simple HTTP API that exposes the following endpoints:

GET /runtime/invocation/next

POST /runtime/invocation/ (your handler's result)

POST /runtime/invocation/ (your handler's error)
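To make the endpoints concrete, here is a small Python sketch that builds their full URLs. It relies on details from AWS's Runtime API reference rather than this post: the client reads the API's host:port from the AWS_LAMBDA_RUNTIME_API environment variable, every path carries a 2018-06-01 version prefix, and the two POST endpoints include the invocation's request ID followed by /response or /error. The function name runtime_urls is hypothetical.

```python
import os
from typing import Dict, Optional

# Version segment that prefixes every path in the real Runtime API.
API_VERSION = "2018-06-01"


def runtime_urls(request_id: Optional[str] = None, api: Optional[str] = None) -> Dict[str, str]:
    """Build the Runtime API URLs (hypothetical helper).

    `api` is the host:port value Lambda exposes in AWS_LAMBDA_RUNTIME_API.
    The response/error URLs only exist once we know which invocation
    (request ID) we are answering.
    """
    api = api or os.environ["AWS_LAMBDA_RUNTIME_API"]
    base = f"http://{api}/{API_VERSION}/runtime/invocation"
    urls = {"next": f"{base}/next"}
    if request_id is not None:
        urls["response"] = f"{base}/{request_id}/response"
        urls["error"] = f"{base}/{request_id}/error"
    return urls
```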

The Flow

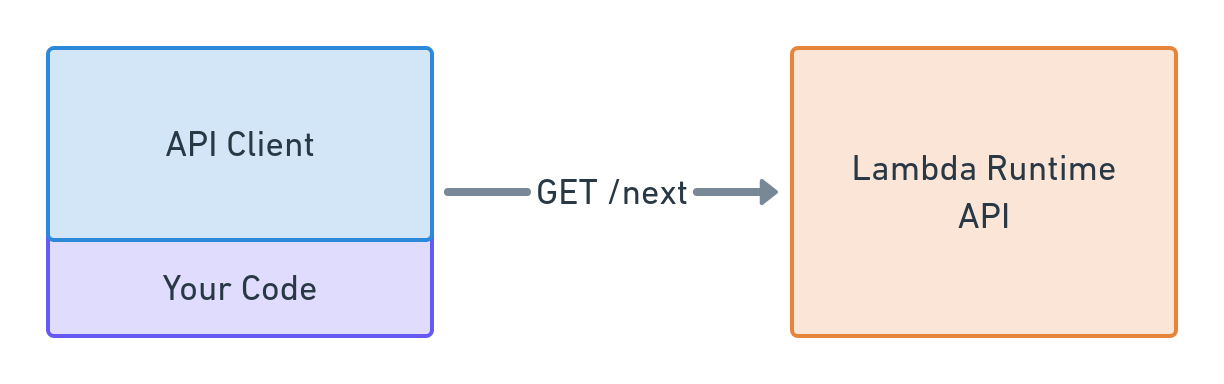

When your function boots up, it runs an API client specific to the language you are using. This client will load your handler into its process and then make a request to the /runtime/invocation/next endpoint.

This request blocks until there is an invocation for your function to handle. Once one arrives, the endpoint returns the event payload that should be passed to your function.
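A sketch of that blocking call in Python, using only the standard library. It assumes the real API's behavior as documented by AWS: the event comes back as the JSON response body, and the invocation is identified by a Lambda-Runtime-Aws-Request-Id response header. next_invocation is a hypothetical name.

```python
import json
import urllib.request
from typing import Any, Tuple


def next_invocation(api: str) -> Tuple[str, Any]:
    """Long-poll the Runtime API for the next event (hypothetical helper).

    No timeout is passed on purpose: the Runtime API holds this request
    open until there is an invocation for the function to handle.
    """
    url = f"http://{api}/2018-06-01/runtime/invocation/next"
    with urllib.request.urlopen(url) as resp:
        # The same request ID is needed later to report the result or error.
        request_id = resp.headers["Lambda-Runtime-Aws-Request-Id"]
        event = json.loads(resp.read())
    return request_id, event
```

Keeping the request ID alongside the event matters because it is the only thing that ties the eventual POST back to this invocation.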

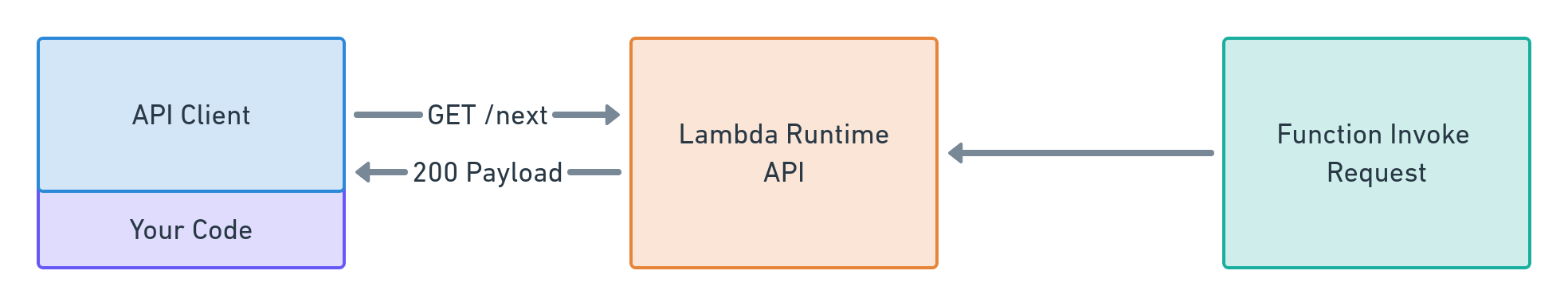

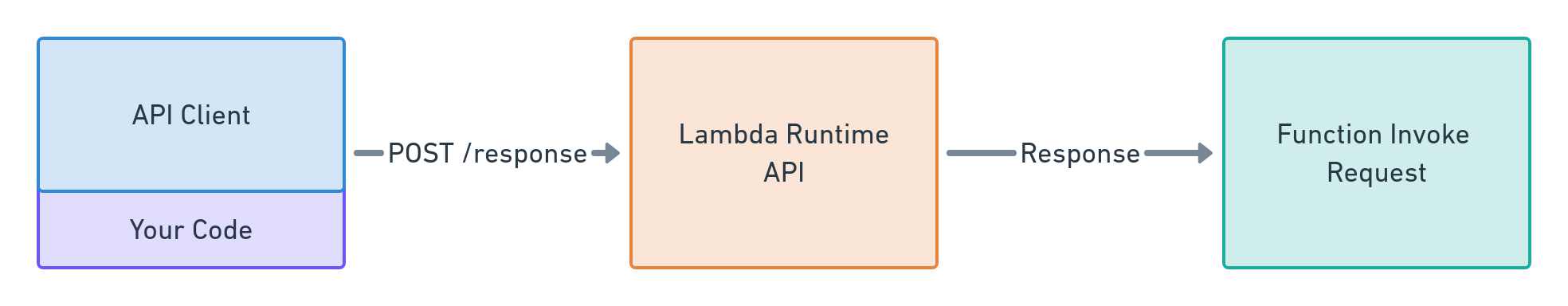

Now that the API client has the payload, it calls your handler, passing the payload in as an argument.

If your handler succeeds, the API client POSTs the result to /runtime/invocation/

If your handler fails, the API client POSTs the error to /runtime/invocation/
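Reporting the outcome might look like the following Python sketch. invoke_and_report is a hypothetical helper; the errorMessage/errorType/stackTrace error body follows the format in AWS's Runtime API documentation. A real client would also need to handle results that can't be serialized to JSON.

```python
import json
import traceback
import urllib.request
from typing import Any, Callable


def invoke_and_report(api: str, request_id: str, handler: Callable[[Any], Any], event: Any) -> str:
    """Run the handler once and POST its outcome (hypothetical helper).

    Returns which endpoint was used: "response" or "error".
    """
    try:
        outcome, body = "response", handler(event)
    except Exception as exc:
        # Error body shape described in AWS's Runtime API docs.
        outcome = "error"
        body = {
            "errorMessage": str(exc),
            "errorType": type(exc).__name__,
            "stackTrace": traceback.format_exc().splitlines(),
        }
    url = f"http://{api}/2018-06-01/runtime/invocation/{request_id}/{outcome}"
    req = urllib.request.Request(
        url,
        data=json.dumps(body).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    urllib.request.urlopen(req).close()
    return outcome
```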

The Lambda Runtime API takes care of forwarding the result to wherever it needs to go. The API client then starts the cycle over by making another request to /runtime/invocation/next. Note that this time it doesn't need to import your handler again, which is why cold starts don't happen on every invocation.
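The whole cycle boils down to a loop. In this Python sketch the three steps are passed in as functions (the names load_handler, get_next and report are hypothetical) so the loop can be exercised without a network. The detail to notice is that the handler is loaded once, before the loop: that one-time load is the cold start, and every later iteration reuses it.

```python
from typing import Any, Callable, Optional, Tuple


def run_runtime_loop(
    load_handler: Callable[[], Callable[[Any], Any]],
    get_next: Callable[[], Tuple[str, Any]],
    report: Callable[[str, str, Any], None],
    max_invocations: Optional[int] = None,
) -> None:
    """Skeleton of a runtime client's main loop (hypothetical).

    max_invocations only exists so the sketch can stop; a real client
    loops forever.
    """
    handler = load_handler()  # cold start: happens once per process
    count = 0
    while max_invocations is None or count < max_invocations:
        request_id, event = get_next()  # blocks until there is work
        try:
            outcome, body = "response", handler(event)
        except Exception as exc:
            outcome, body = "error", {"errorMessage": str(exc), "errorType": type(exc).__name__}
        report(request_id, outcome, body)  # then go back for the next event
        count += 1
```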

And that's it! The protocol really is that simple, and recreating it just means implementing those three endpoints.

How we fake it

In SST, your functions execute locally, so they need a local Lambda Runtime API to talk to. We provide a fake version that emulates the same three endpoints and connects to your AWS account over a websocket.

When a request to invoke a function comes in, it is forwarded over the websocket and handed to your local code through the /runtime/invocation/next endpoint. The response your code POSTs is then sent back to AWS.
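A minimal, hypothetical version of such a fake API is sketched below in Python. It is not SST's implementation: instead of a websocket connected to AWS, whoever calls invoke() supplies the event and receives the result, and FakeRuntimeAPI, invoke and address are names made up for illustration. It uses the versioned paths and request-ID header from AWS's Runtime API documentation, and a ThreadingHTTPServer so that a client parked on /next doesn't stop the server from answering other requests.

```python
import json
import queue
import threading
import uuid
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from typing import Any, Tuple

PREFIX = "/2018-06-01/runtime/invocation/"


class FakeRuntimeAPI:
    """Hypothetical local stand-in for the Lambda Runtime API."""

    def __init__(self, host: str = "127.0.0.1", port: int = 0) -> None:
        self.pending = queue.Queue()  # (request_id, event) waiting for a client
        self.results = {}             # request_id -> Queue that receives (kind, payload)
        api = self

        class Handler(BaseHTTPRequestHandler):
            def do_GET(self) -> None:
                if self.path != PREFIX + "next":
                    self.send_error(404)
                    return
                # Block until invoke() queues an event, like the real endpoint.
                request_id, event = api.pending.get()
                body = json.dumps(event).encode()
                self.send_response(200)
                self.send_header("Lambda-Runtime-Aws-Request-Id", request_id)
                self.send_header("Content-Type", "application/json")
                self.send_header("Content-Length", str(len(body)))
                self.end_headers()
                self.wfile.write(body)

            def do_POST(self) -> None:
                # Expected: PREFIX + "<request-id>/response" or "<request-id>/error".
                request_id, _, kind = self.path[len(PREFIX):].partition("/")
                waiting = api.results.get(request_id)
                if not self.path.startswith(PREFIX) or kind not in ("response", "error") or waiting is None:
                    self.send_error(404)
                    return
                length = int(self.headers.get("Content-Length", 0))
                waiting.put((kind, json.loads(self.rfile.read(length) or b"null")))
                self.send_response(202)
                self.send_header("Content-Length", "0")
                self.end_headers()

            def log_message(self, *args: Any) -> None:
                pass  # keep the sketch quiet

        self.server = ThreadingHTTPServer((host, port), Handler)
        # Don't wait on a client still parked on /next when closing.
        self.server.block_on_close = False
        # host:port value to hand a client via AWS_LAMBDA_RUNTIME_API.
        self.address = f"{host}:{self.server.server_port}"
        threading.Thread(target=self.server.serve_forever, daemon=True).start()

    def invoke(self, event: Any, timeout: float = 30.0) -> Tuple[str, Any]:
        """Queue an event and wait for the local client to POST its outcome."""
        request_id = str(uuid.uuid4())
        self.results[request_id] = queue.Queue()
        self.pending.put((request_id, event))
        try:
            return self.results[request_id].get(timeout=timeout)
        finally:
            del self.results[request_id]

    def close(self) -> None:
        self.server.shutdown()
        self.server.server_close()
```

To use it, start a FakeRuntimeAPI, launch a runtime client with AWS_LAMBDA_RUNTIME_API set to its address, and call invoke(); the call returns ("response", payload) or ("error", payload) once the client POSTs back.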

This is how we mirror the production environment without requiring your code to be uploaded on every change. Your code can't tell that it isn't running in AWS, because it finds the Lambda Runtime API it expects.

Lambda Runtime Clients

Since SST supports multiple languages, you might think we had to recreate the API client for each one. However, AWS open sources these clients for the languages it supports natively, for example Node.js, Go, and .NET.

These clients even follow a common convention of reading the API's address from the AWS_LAMBDA_RUNTIME_API environment variable, so we can point them at our local instance. It's like AWS wanted us to do this.
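Pointing an unmodified client at a local API is then mostly a matter of setting that variable when launching it. A Python sketch, where local_runtime_env and start_runtime_client are hypothetical helpers and `command` stands for whatever starts your runtime client. The value is host:port with no scheme, matching the format Lambda itself uses for this variable.

```python
import os
import subprocess
from typing import Dict, List, Optional


def local_runtime_env(runtime_api: str, base: Optional[Dict[str, str]] = None) -> Dict[str, str]:
    """Copy an environment and point AWS_LAMBDA_RUNTIME_API at a local server."""
    env = dict(os.environ if base is None else base)
    env["AWS_LAMBDA_RUNTIME_API"] = runtime_api  # e.g. "127.0.0.1:9001"
    return env


def start_runtime_client(command: List[str], runtime_api: str) -> subprocess.Popen:
    """Launch a runtime client process that will talk to the local API."""
    return subprocess.Popen(command, env=local_runtime_env(runtime_api))
```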

If you want to add support for a new language, all it takes is writing an API client that can talk to those three endpoints and run your code. You can even write it in bash if you want, which is what this Deno implementation does.

Well, that's unimpressive

Hopefully understanding how all this works and how simple it is doesn't leave you feeling too unimpressed with the work we're doing on SST.

We intentionally keep what SST adds to local execution as small as possible, so that everything keeps behaving the same as in production. Cloud-first development is the way to go, and this is a small exception that makes that experience smoother.